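1. Configure IP

    nmtui
    readonly cluster_float_ip="192.168.29.100"
    vim /etc/hosts
    # add the static IP you defined (for example, a line mapping this address
    # to the control-plane endpoint name used in step 4, cluster.svc)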

2. Initial configuration

    swapoff -a
    echo "bridge" | tee /etc/modules-load.d/bridge.conf
    echo "br_netfilter" | tee /etc/modules-load.d/br_netfilter.conf
    chmod 755 /etc/modules-load.d/bridge.conf
    chmod 755 /etc/modules-load.d/br_netfilter.conf
    modprobe bridge
    modprobe br_netfilter
    # NOTE: net.ipv4.ip_forward and the rp_filter keys appear twice below.
    # sysctl -p applies entries in order, so the later values
    # (ip_forward=1, rp_filter=0) are the ones that take effect.
    cat >> /etc/sysctl.conf << "EOF"
    kernel.sysrq=0
    net.ipv4.ip_forward=0
    net.ipv4.conf.all.send_redirects=0
    net.ipv4.conf.default.send_redirects=0
    net.ipv4.conf.all.accept_source_route=0
    net.ipv4.conf.default.accept_source_route=0
    net.ipv4.conf.all.accept_redirects=0
    net.ipv4.conf.default.accept_redirects=0
    net.ipv4.conf.all.secure_redirects=0
    net.ipv4.conf.default.secure_redirects=0
    net.ipv4.icmp_echo_ignore_broadcasts=1
    net.ipv4.icmp_ignore_bogus_error_responses=1
    net.ipv4.conf.all.rp_filter=1
    net.ipv4.conf.default.rp_filter=1
    net.ipv4.tcp_syncookies=1
    kernel.dmesg_restrict=1
    net.ipv6.conf.all.accept_redirects=0
    net.ipv6.conf.default.accept_redirects=0
    net.bridge.bridge-nf-call-iptables=1
    net.ipv4.ip_forward=1
    net.ipv6.conf.all.forwarding=1
    net.bridge.bridge-nf-call-ip6tables=1
    fs.inotify.max_user_watches = 524288
    fs.inotify.max_user_instances = 512
    net.ipv4.conf.default.rp_filter=0
    net.ipv4.conf.all.rp_filter=0
    net.ipv4.conf.lo.rp_filter=0
    net.ipv4.conf.eno1.rp_filter=0
    EOF
    # eno1 above is this machine's NIC name; replace it with your own
    sysctl -p
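The sysctl block above sets a few keys more than once with different values. As a rough sketch (the function name and the sample text are made up for illustration, not part of the original setup), a small Python check can list such conflicts before the file is applied:

```python
def find_conflicting_sysctl_keys(text):
    """Return {key: [values in file order]} for keys given more than one distinct value."""
    seen = {}
    for raw in text.splitlines():
        line = raw.strip()
        # skip blanks, comments, and anything that is not key=value
        if not line or line.startswith(("#", ";")) or "=" not in line:
            continue
        key, value = (part.strip() for part in line.split("=", 1))
        seen.setdefault(key, []).append(value)
    return {k: v for k, v in seen.items() if len(set(v)) > 1}


# Hypothetical excerpt mirroring the duplicated keys in the block above.
sample = """
net.ipv4.ip_forward=0
net.ipv4.conf.all.rp_filter=1
net.ipv4.ip_forward=1
net.ipv4.conf.all.rp_filter=0
fs.inotify.max_user_watches = 524288
"""

conflicts = find_conflicting_sysctl_keys(sample)
for key, values in conflicts.items():
    print(f"{key}: {' -> '.join(values)} (the last value wins)")
```

In practice you would pass it the contents of /etc/sysctl.conf and remove the earlier, overridden entries.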

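3. Get the dependencies

Note: the latest upstream releases may not work when downloaded directly. If that happens, try installing the packages through apt instead.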

    # runc
    wget https://github.com/opencontainers/runc/releases/download/v1.1.14/runc.amd64
    install -m 755 runc.amd64 /usr/local/sbin/runc
    which runc

    # CNI plugins
    wget https://github.com/containernetworking/plugins/releases/download/v1.5.1/cni-plugins-linux-amd64-v1.5.1.tgz
    mkdir -p /opt/cni/bin
    tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.5.1.tgz
    chown -R root:root /opt/cni

    # containerd
    wget https://github.com/containerd/containerd/releases/download/v1.7.22/containerd-1.7.22-linux-amd64.tar.gz
    tar Cxzvf /usr/local containerd-1.7.22-linux-amd64.tar.gz
    which containerd
    mkdir -p /etc/containerd/
    containerd config default > /etc/containerd/config.toml
    sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml
    wget https://raw.githubusercontent.com/containerd/containerd/refs/heads/main/containerd.service
    mkdir -p /usr/local/lib/systemd/system/
    cp containerd.service /usr/local/lib/systemd/system/containerd.service
    systemctl daemon-reload
    systemctl enable --now containerd
    systemctl status containerd

3. Install Kubernetes

    # crictl
    wget https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.31.1/crictl-v1.31.1-linux-amd64.tar.gz
    tar zxvf crictl-v1.31.1-linux-amd64.tar.gz -C /usr/local/bin
    which crictl
    crictl config runtime-endpoint unix:///var/run/containerd/containerd.sock
    crictl config image-endpoint unix:///run/containerd/containerd.sock

    # kubelet, kubeadm, kubectl from the Aliyun mirror
    apt-get update && apt-get install -y apt-transport-https
    mkdir -p /etc/apt/keyrings   # harmless if the directory already exists
    curl -fsSL https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.31/deb/Release.key | gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
    echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.31/deb/ /" | tee /etc/apt/sources.list.d/kubernetes.list
    apt-get update
    apt-get install -y kubelet kubeadm kubectl
    systemctl enable kubelet && systemctl start kubelet
    sudo apt-mark hold kubelet kubeadm kubectl

4. Configure Kubernetes

    # Allow kubelet to run with swap present.
    # (On Debian/Ubuntu the kubeadm drop-in usually reads /etc/default/kubelet;
    # the failSwapOn: false setting added to kubeadm-init.yaml below covers that case.)
    mkdir -p /etc/sysconfig/
    cat > /etc/sysconfig/kubelet <<"EOF"
    Environment="KUBELET_EXTRA_ARGS=--fail-swap-on=false"
    EOF
    systemctl daemon-reload
    systemctl enable --now kubelet

    # Generate the default kubeadm configuration and patch it
    kubeadm config print init-defaults --component-configs KubeProxyConfiguration,KubeletConfiguration > kubeadm-init.yaml
    sed -i 's/\(kubernetesVersion: \).*/\1 1.31.1/g' kubeadm-init.yaml
    # double quotes so that ${cluster_float_ip} (set in step 1) is expanded by the shell
    sed -i "s/\(advertiseAddress: \).*/\1${cluster_float_ip}/g" kubeadm-init.yaml
    sed -i 's/\( name: \).*//g' kubeadm-init.yaml
    # podSubnet must be indented like serviceSubnet (two spaces under networking:)
    sed -i 's#\(serviceSubnet: \).*#\1 10.96.0.0/16,2001:db8:42:1::/112\n  podSubnet: 10.244.0.0/16,2001:db8:42:0::/56#g' kubeadm-init.yaml
    sed -i 's/imageRepository: registry.k8s.io/imageRepository: registry.aliyuncs.com\/google_containers/g' kubeadm-init.yaml
    sed -i '/scheduler: {}/ a\controlPlaneEndpoint: cluster.svc' kubeadm-init.yaml
    sed -i '/memorySwap: {}/ a\failSwapOn: false' kubeadm-init.yaml

    # Pre-pull images and install helper packages
    kubeadm config images list --config=kubeadm-init.yaml
    crictl pull registry.aliyuncs.com/google_containers/pause:3.8
    ctr -n k8s.io i tag registry.aliyuncs.com/google_containers/pause:3.8 registry.k8s.io/pause:3.8
    apt install conntrack bash-completion ipvsadm socat net-tools iproute2 -y

    # Initialize the control plane
    kubeadm init --config=kubeadm-init.yaml --upload-certs

    # Obtain the join command
    kubeadm token list
    kubeadm token create --print-join-command
    # Output:
    # kubeadm join k8s-master-7-13:8443 --token fw6ywo.1sfp61ddwlg1we27 --discovery-token-ca-cert-hash sha256:52cab6e89be9881e2e423149ecb00e610619ba0fd85f2eccc3137adffa77bb04

    # Obtain the certificate key needed to join additional control-plane nodes
    kubeadm init phase upload-certs --upload-certs
    # Output:
    # [upload-certs] Using certificate key: 4b525948d4d43573ac8ad6721ae6526994656fda0f41c58f5a34ab6086748273

    # Join a worker node
    kubeadm join k8s-master-7-13:8443 --token fw6ywo.1sfp61ddwlg1we27 --discovery-token-ca-cert-hash sha256:52cab6e89be9881e2e423149ecb00e610619ba0fd85f2eccc3137adffa77bb04

    # Join a control-plane node
    kubeadm join k8s-master-7-13:8443 --token fw6ywo.1sfp61ddwlg1we27 --discovery-token-ca-cert-hash sha256:52cab6e89be9881e2e423149ecb00e610619ba0fd85f2eccc3137adffa77bb04 --certificate-key 4b525948d4d43573ac8ad6721ae6526994656fda0f41c58f5a34ab6086748273 --control-plane

    # kubectl environment, completion, and alias
    echo 'export KUBECONFIG=/etc/kubernetes/admin.conf' >> ~/.bashrc
    source ~/.bashrc
    echo 'source <(kubectl completion bash)' >>~/.bashrc
    echo 'alias k=kubectl' >>~/.bashrc
    echo 'complete -o default -F __start_kubectl k' >>~/.bashrc
    source ~/.bashrc

    # Let regular pods schedule on the control-plane node (ethereal-desktop is this machine's node name)
    kubectl taint node ethereal-desktop node-role.kubernetes.io/control-plane:NoSchedule-
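The worker and control-plane join commands differ only in the `--control-plane` and `--certificate-key` flags. The sketch below assembles both from their parts and rejects a malformed token or a CA hash that is not exactly 64 hex characters, which catches copy-and-paste truncation. The helper name `build_join_command` is hypothetical; the values are the sample ones from this section.

```python
import re
import shlex


def build_join_command(endpoint, token, ca_cert_hash, certificate_key=None):
    """Assemble a kubeadm join command line; pass certificate_key for a control-plane join."""
    # bootstrap tokens have the form <6 chars>.<16 chars>, lowercase letters and digits
    if not re.fullmatch(r"[a-z0-9]{6}\.[a-z0-9]{16}", token):
        raise ValueError("token must look like abcdef.0123456789abcdef")
    # the discovery hash is a SHA-256 digest: 64 hex characters after the prefix
    if not re.fullmatch(r"sha256:[0-9a-f]{64}", ca_cert_hash):
        raise ValueError("CA cert hash must be sha256: followed by 64 hex characters")
    args = [
        "kubeadm", "join", endpoint,
        "--token", token,
        "--discovery-token-ca-cert-hash", ca_cert_hash,
    ]
    if certificate_key is not None:
        args += ["--certificate-key", certificate_key, "--control-plane"]
    return shlex.join(args)


ENDPOINT = "k8s-master-7-13:8443"
TOKEN = "fw6ywo.1sfp61ddwlg1we27"
CA_HASH = "sha256:52cab6e89be9881e2e423149ecb00e610619ba0fd85f2eccc3137adffa77bb04"
CERT_KEY = "4b525948d4d43573ac8ad6721ae6526994656fda0f41c58f5a34ab6086748273"

worker_cmd = build_join_command(ENDPOINT, TOKEN, CA_HASH)
control_plane_cmd = build_join_command(ENDPOINT, TOKEN, CA_HASH, CERT_KEY)
print(worker_cmd)
print(control_plane_cmd)
```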

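5. Configure networking

The following sets up Cilium. If you plan to use KubeVirt, combining kube-ovn with Cilium is recommended; see the blog post 《kubevirt入门》 (Getting Started with KubeVirt).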

    wget https://github.com/cilium/cilium-cli/releases/download/v0.16.18/cilium-linux-amd64.tar.gz
    tar xzvfC cilium-linux-amd64.tar.gz /usr/local/bin
    cilium version --client
    cilium install --version 1.16.1
    cilium config set ipam kubernetes
    cilium config set enable-ipv4 true
    cilium config set cluster-pool-ipv4-cidr 10.96.0.0/16
    cilium config set enable-ipv6 false
    cilium status --wait

6. Configure local storage (note: a PV cannot be created automatically for pods that bypass the scheduling step)

    wget https://raw.githubusercontent.com/rancher/local-path-provisioner/v0.0.29/deploy/local-path-storage.yaml
    sed -i 's#/opt/local-path-provisioner#/opt/k8s-local-path-storage#g' local-path-storage.yaml
    crictl pull dhub.kubesre.xyz/rancher/local-path-provisioner:v0.0.29
    ctr -n k8s.io i tag dhub.kubesre.xyz/rancher/local-path-provisioner:v0.0.29 docker.io/rancher/local-path-provisioner:v0.0.29
    k apply -f local-path-storage.yaml

7. Configure registry mirrors (network proxy)

    vim /etc/containerd/config.toml

    # Example 1: mirror for docker.io
    [plugins."io.containerd.grpc.v1.cri".registry.configs]
      [plugins."io.containerd.grpc.v1.cri".registry.configs."docker.io".tls]
        insecure_skip_verify = true
    [plugins."io.containerd.grpc.v1.cri".registry.headers]
    [plugins."io.containerd.grpc.v1.cri".registry.mirrors]
      [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
        endpoint = ["https://docker.m.daocloud.io"]

    # Example 2: private registry with credentials.
    # TOML does not allow the same table header twice, so when combining both
    # examples keep each [plugins...] header only once.
    # Replace the placeholders with your own credentials and do not publish them.
    [plugins."io.containerd.grpc.v1.cri".registry.configs]
      [plugins."io.containerd.grpc.v1.cri".registry.configs."registry.cn-shanghai.aliyuncs.com".tls]
        insecure_skip_verify = true
      [plugins."io.containerd.grpc.v1.cri".registry.configs."registry.cn-shanghai.aliyuncs.com".auth]
        username = "<your-username>"
        password = "<your-password>"
    [plugins."io.containerd.grpc.v1.cri".registry.headers]
    [plugins."io.containerd.grpc.v1.cri".registry.mirrors]
      [plugins."io.containerd.grpc.v1.cri".registry.mirrors."registry.cn-shanghai.aliyuncs.com"]
        endpoint = ["https://registry.cn-shanghai.aliyuncs.com"]

    systemctl daemon-reload
    systemctl restart containerd
    systemctl status containerd

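For a public registry setup, the hosts-based approach is actually the better choice. It is less suitable for a private deployment, because hosts.toml has no username or password fields; as a workaround you can generate a base64 auth token, see "Private Registry auth config when using hosts.toml · containerd/containerd · Discussion #6468".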
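A base64 auth token of this kind is normally just the HTTP Basic credential, that is, `username:password` encoded with base64. A minimal sketch follows; where exactly the value goes in hosts.toml (for instance as an `authorization` header for the host) is an assumption to verify against the linked discussion and the containerd documentation.

```python
import base64


def basic_auth_value(username, password):
    """Return the value of an HTTP Basic Authorization header for the given credentials."""
    raw = f"{username}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


# Placeholder credentials for illustration only.
print(basic_auth_value("user", "pass"))  # Basic dXNlcjpwYXNz
```

Keep in mind that base64 is an encoding, not encryption, so the resulting file needs the same protection as a plain-text password.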

Within mainland China, the mirrors that mainly work are DaoCloud and the Nanjing University (NJU) mirror.

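DaoCloud/public-image-mirror: many images are hosted overseas (gcr, for example) and download slowly from within China, so acceleration is needed. The project aims to provide a stable, reliable, and secure container image service connected to the whole world.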

Mirrors

    # create the /etc/containerd/certs.d directories
    mkdir -p /etc/containerd/certs.d
    mkdir -p /etc/containerd/certs.d/docker.io
    mkdir -p /etc/containerd/certs.d/quay.io
    mkdir -p /etc/containerd/certs.d/registry.k8s.io
    mkdir -p /etc/containerd/certs.d/ghcr.io

    # /etc/containerd/certs.d/docker.io/hosts.toml
    server = "https://docker.io"

    [host."https://docker.m.daocloud.io"]
      capabilities = ["pull", "resolve"]

    # /etc/containerd/certs.d/quay.io/hosts.toml
    server = "https://quay.io"

    [host."https://quay.m.daocloud.io"]
      capabilities = ["pull", "resolve"]

    # /etc/containerd/certs.d/registry.k8s.io/hosts.toml
    server = "https://registry.k8s.io"

    [host."https://k8s.m.daocloud.io"]
      capabilities = ["pull", "resolve"]

    # /etc/containerd/certs.d/ghcr.io/hosts.toml
    server = "https://ghcr.io"

    [host."https://ghcr.nju.edu.cn"]
      capabilities = ["pull", "resolve"]

    # /etc/containerd/certs.d/gcr.io/hosts.toml

    # set config_path to /etc/containerd/certs.d
    # /etc/containerd/config.toml
    [plugins."io.containerd.grpc.v1.cri".registry]
      config_path = "/etc/containerd/certs.d"

      [plugins."io.containerd.grpc.v1.cri".registry.auths]

      [plugins."io.containerd.grpc.v1.cri".registry.configs]

      [plugins."io.containerd.grpc.v1.cri".registry.headers]

      [plugins."io.containerd.grpc.v1.cri".registry.mirrors]

    [plugins."io.containerd.grpc.v1.cri".x509_key_pair_streaming]
      tls_cert_file = ""
      tls_key_file = ""
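Since every hosts.toml above has the same shape, the files can be generated rather than written by hand. The following is only a sketch: the helper names are invented, the registry-to-mirror table copies the pairs listed above, and `write_all` takes the target directory as a parameter so it can be tried against a scratch directory before pointing it at /etc/containerd/certs.d.

```python
from pathlib import Path

# Registry -> mirror pairs taken from the hosts.toml files above.
MIRRORS = {
    "docker.io": "https://docker.m.daocloud.io",
    "quay.io": "https://quay.m.daocloud.io",
    "registry.k8s.io": "https://k8s.m.daocloud.io",
    "ghcr.io": "https://ghcr.nju.edu.cn",
}


def render_hosts_toml(registry, mirror):
    """Render the hosts.toml text for one registry and its pull mirror."""
    return (
        f'server = "https://{registry}"\n'
        "\n"
        f'[host."{mirror}"]\n'
        '  capabilities = ["pull", "resolve"]\n'
    )


def write_all(base_dir, mirrors=MIRRORS):
    """Write <base_dir>/<registry>/hosts.toml for every entry; return the paths written."""
    written = []
    for registry, mirror in mirrors.items():
        target = Path(base_dir) / registry / "hosts.toml"
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_text(render_hosts_toml(registry, mirror), encoding="utf-8")
        written.append(target)
    return written


print(render_hosts_toml("docker.io", MIRRORS["docker.io"]), end="")
```

Calling `write_all("/etc/containerd/certs.d")` as root would produce the same layout as the manual steps; restart containerd afterwards.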

8. Deploy Metrics Server

    wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.7.2/components.yaml

    # edit components.yaml so that the metrics-server container args read as
    # follows (--kubelet-insecure-tls is the added argument)
    containers:
    - args:
      - --cert-dir=/tmp
      - --secure-port=443
      - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
      - --kubelet-use-node-status-port
      - --metric-resolution=15s
      - --kubelet-insecure-tls

    k apply -f components.yaml

9. Deployment complete (runtime files, such as cluster state, are located under /var/lib/kubelet)

    root@ubuntu-vm-2404:/home/ethereal/offline/offline# k get pods -A
    NAMESPACE            NAME                                      READY   STATUS    RESTARTS   AGE
    kube-system          cilium-envoy-9dwng                        1/1     Running   0          11m
    kube-system          cilium-jjwx2                              1/1     Running   0          10m
    kube-system          cilium-operator-5c7867ccd5-pdsb9          1/1     Running   0          11m
    kube-system          coredns-855c4dd65d-95sfx                  1/1     Running   0          11m
    kube-system          coredns-855c4dd65d-h7lv8                  1/1     Running   0          11m
    kube-system          etcd-ubuntu-vm-2404                       1/1     Running   0          11m
    kube-system          kube-apiserver-ubuntu-vm-2404             1/1     Running   0          11m
    kube-system          kube-controller-manager-ubuntu-vm-2404    1/1     Running   0          11m
    kube-system          kube-proxy-p8bht                          1/1     Running   0          11m
    kube-system          kube-scheduler-ubuntu-vm-2404             1/1     Running   0          11m
    kube-system          metrics-server-587b667b55-mkxw2           1/1     Running   0          22s
    local-path-storage   local-path-provisioner-cdf8878d8-5nlbm    1/1     Running   0          5m43s
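When the pod list grows, scanning it by eye gets tedious. A small sketch that parses the text output of `k get pods -A` and reports anything that is not fully ready; the function name and the sample text are illustrative, and the check is deliberately strict, so pods in `Completed` state would also be reported.

```python
def not_ready_pods(kubectl_output):
    """Return (namespace, name, ready, status) for pods that are not Running and fully ready."""
    lines = [line for line in kubectl_output.splitlines() if line.strip()]
    problems = []
    for line in lines[1:]:  # skip the header row
        namespace, name, ready, status = line.split()[:4]
        have, want = ready.split("/")
        if status != "Running" or have != want:
            problems.append((namespace, name, ready, status))
    return problems


# Hypothetical output with one pod still starting.
sample = """\
NAMESPACE     NAME                        READY   STATUS    RESTARTS   AGE
kube-system   cilium-jjwx2                1/1     Running   0          10m
kube-system   coredns-855c4dd65d-95sfx    0/1     Pending   0          11m
"""

for pod in not_ready_pods(sample):
    print("not ready:", *pod)
```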

10. Offline download script

    #!/bin/bash
    # download all the dependencies for the project
    set -e

    # resolve the script directory first
    script_dir=$(cd "$(dirname "$0")" && pwd)

    # download runc
    echo -e "\e[94mPreparing runc... \e[39m"
    cd $script_dir
    rm -rf runc && mkdir runc && cd runc
    wget https://gh-proxy.com/github.com/opencontainers/runc/releases/download/v1.1.14/runc.amd64

    # download cni
    echo -e "\e[94mPreparing cni... \e[39m"
    cd $script_dir
    rm -rf cni && mkdir cni && cd cni
    wget https://gh-proxy.com/github.com/containernetworking/plugins/releases/download/v1.5.1/cni-plugins-linux-amd64-v1.5.1.tgz

    # download containerd
    echo -e "\e[94mPreparing containerd... \e[39m"
    cd $script_dir
    rm -rf containerd && mkdir containerd && cd containerd
    wget https://gh-proxy.com/github.com/containerd/containerd/releases/download/v1.7.22/containerd-1.7.22-linux-amd64.tar.gz
    wget https://gh-proxy.com/raw.githubusercontent.com/containerd/containerd/refs/heads/main/containerd.service

    # download crictl
    echo -e "\e[94mPreparing crictl... \e[39m"
    cd $script_dir
    rm -rf crictl && mkdir crictl && cd crictl
    wget https://gh-proxy.com/github.com/kubernetes-sigs/cri-tools/releases/download/v1.31.1/crictl-v1.31.1-linux-amd64.tar.gz

    # download kubernetes
    echo -e "\e[94mPreparing kubernetes... \e[39m"
    cd $script_dir
    rm -rf k8s_debs && mkdir k8s_debs && cd k8s_debs
    apt download conntrack
    wget https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.31/deb/amd64/cri-tools_1.31.1-1.1_amd64.deb
    wget https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.31/deb/amd64/kubernetes-cni_1.5.1-1.1_amd64.deb
    wget https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.31/deb/amd64/kubeadm_1.31.1-1.1_amd64.deb
    wget https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.31/deb/amd64/kubectl_1.31.1-1.1_amd64.deb
    wget https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.31/deb/amd64/kubelet_1.31.1-1.1_amd64.deb

    # download images
    echo -e "\e[94mPreparing images... \e[39m"
    cd $script_dir
    rm -rf images && mkdir images && cd images
    mkdir tmp && cd tmp
    tar zxvf $script_dir/containerd/containerd-1.7.22-linux-amd64.tar.gz -C .
    if command -v containerd > /dev/null; then
        echo -e "\e[94mcontainerd has been installed, skip \e[39m"
    else
        echo -e "\e[94mcontainerd has not been installed, installing... \e[39m"
        sudo apt install -y containerd
    fi
    images_list=(
        # k8s
        "registry.aliyuncs.com/google_containers/coredns:v1.11.3"
        "registry.aliyuncs.com/google_containers/coredns@sha256:6662e5928ea0c1471d663f224875fd824af6c772a1b959f8e7a5eef1dacad898"
        "registry.aliyuncs.com/google_containers/etcd:3.5.15-0"
        "registry.aliyuncs.com/google_containers/etcd@sha256:d0e1bc44b9bc37d0b63612e1a11b43e07bc650ffc0545d58f7991607460974d4"
        "registry.aliyuncs.com/google_containers/kube-apiserver:v1.31.1"
        "registry.aliyuncs.com/google_containers/kube-apiserver@sha256:fdd7b615e9b5b972188de0a27ec832b968d5d8c7db71b0159a23a5cd424e7369"
        "registry.aliyuncs.com/google_containers/kube-controller-manager:v1.31.1"
        "registry.aliyuncs.com/google_containers/kube-controller-manager@sha256:c81d05628171c46d5d81f08db3d39a9c5ce13cf60b7e990215aa1191df5d7f35"
        "registry.aliyuncs.com/google_containers/kube-proxy:v1.31.1"
        "registry.aliyuncs.com/google_containers/kube-proxy@sha256:4331680d68b52d6db6556e2d26625106e64ace163411d79535e712b24ed367b3"
        "registry.aliyuncs.com/google_containers/kube-scheduler:v1.31.1"
        "registry.aliyuncs.com/google_containers/kube-scheduler@sha256:c9593d1f60ef43c872bcd4d43ac399d0d40e41d8f011fd71989b96caeddc0f6d"
        "registry.aliyuncs.com/google_containers/pause:3.10"
        "registry.aliyuncs.com/google_containers/pause@sha256:0ca1162b75bf9fc55c4cac99a1ff06f7095c881d5c07acfa07c853e72225c36f"
        "registry.k8s.io/pause:3.8"
        "registry.k8s.io/metrics-server/metrics-server:v0.7.2"
        "registry.k8s.io/metrics-server/metrics-server@sha256:ffcb2bf004d6aa0a17d90e0247cf94f2865c8901dcab4427034c341951c239f9"
        # cilium
        "quay.io/cilium/cilium-envoy@sha256:bd5ff8c66716080028f414ec1cb4f7dc66f40d2fb5a009fff187f4a9b90b566b"
        "quay.io/cilium/cilium@sha256:0b4a3ab41a4760d86b7fc945b8783747ba27f29dac30dd434d94f2c9e3679f39"
        "quay.io/cilium/operator-generic@sha256:3bc7e7a43bc4a4d8989cb7936c5d96675dd2d02c306adf925ce0a7c35aa27dc4"
        # csi
        # "gcr.io/k8s-staging-sig-storage/csi-resizer:canary"
        # "quay.io/k8scsi/mock-driver:canary"
        # "quay.io/k8scsi/mock-driver@sha256:d4ed892c6a4a85cac2c29360754ab3bdbaa6fce6efde0ebb9db18bef5e73a5ed"
        "docker.io/rancher/local-path-provisioner:v0.0.29"
        # kubevirt
        "quay.io/kubevirt/cdi-apiserver:v1.60.3"
        "quay.io/kubevirt/cdi-apiserver@sha256:9f5e76ecc8135681a16d496dcb72051a73ee58041ae4ebccd69ab657e4794add"
        "quay.io/kubevirt/cdi-controller:v1.60.3"
        "quay.io/kubevirt/cdi-controller@sha256:49571590f136848f9b6c0ed84ff9e2a3fe15a1b34194bec698074038fe5f5973"
        "quay.io/kubevirt/cdi-importer:v1.60.3"
        "quay.io/kubevirt/cdi-importer@sha256:14de12bf71e7050d5abaf96176da8f09f3d689a1e6f44958b17216dc6bf8a9a7"
        "quay.io/kubevirt/cdi-operator:v1.60.3"
        "quay.io/kubevirt/cdi-operator@sha256:86569fd9d601a96a9d8901ccafd57e34b0139999601ffc1ceb5b00574212923d"
        "quay.io/kubevirt/cdi-uploadproxy:v1.60.3"
        "quay.io/kubevirt/cdi-uploadproxy@sha256:09d24a2abc9006d95fc9d445e90d737df3c2345ce9b472f3d2376c6800b17e26"
        "quay.io/kubevirt/virt-api:v1.3.1"
        "quay.io/kubevirt/virt-api@sha256:2c63b197119f0d503320d142a31a421d8e186ef2c3c75aa7b262388cb4ef5d99"
        "quay.io/kubevirt/virt-controller:v1.3.1"
        "quay.io/kubevirt/virt-controller@sha256:1094eb2b1bc07eb6fa33afcab04e03ff8a4f25760b630d360d1e29e2b4615498"
        "quay.io/kubevirt/virt-handler:v1.3.1"
        "quay.io/kubevirt/virt-handler@sha256:c441320477f5a4e80035f1e9997f2fd09db8eab4943f42c685d2c664ba77b851"
        "quay.io/kubevirt/virt-launcher:v1.3.1"
        "quay.io/kubevirt/virt-launcher@sha256:b15f8049d7f1689d9d8c338d255dc36b15655fd487e824b35e2b139258d44209"
        "quay.io/kubevirt/virt-operator:v1.3.1"
        "quay.io/kubevirt/virt-operator@sha256:9eb46469574941ad21833ea00a518e97c0beb0c80e0d73a91973021d0c1e5c7a"
    )
    # flatten the array into one space-separated string for the loop and the export below
    images_list="${images_list[*]}"
    for img in $images_list; do
        echo -e "\e[94m -> Preparing $img... \e[39m"
        ./bin/ctr -n k8s.io images pull --platform linux/amd64 $img --hosts-dir $script_dir
    done
    eval "./bin/ctr -n k8s.io images export --platform linux/amd64 ../containerd_images.tar ${images_list}"
    cd ..
    rm -rf tmp

    # download cilium
    echo -e "\e[94mPreparing cilium... \e[39m"
    cd $script_dir
    rm -rf cilium && mkdir cilium && cd cilium
    wget https://gh-proxy.com/github.com/cilium/cilium-cli/releases/download/v0.16.18/cilium-linux-amd64.tar.gz

    # download local-path-provisioner
    echo -e "\e[94mPreparing local-path-provisioner... \e[39m"
    cd $script_dir
    rm -rf local-path-provisioner && mkdir local-path-provisioner && cd local-path-provisioner
    wget https://gh-proxy.com/raw.githubusercontent.com/rancher/local-path-provisioner/v0.0.29/deploy/local-path-storage.yaml

    # download metrics
    echo -e "\e[94mPreparing metrics... \e[39m"
    cd $script_dir
    rm -rf metrics && mkdir metrics && cd metrics
    wget https://gh-proxy.com/github.com/kubernetes-sigs/metrics-server/releases/download/v0.7.2/components.yaml

    # download kubevirt
    echo -e "\e[94mPreparing kubevirt... \e[39m"
    cd $script_dir
    rm -rf kubevirt && mkdir kubevirt && cd kubevirt
    sudo apt update
    apt download acl adwaita-icon-theme alsa-topology-conf alsa-ucm-conf at-spi2-common at-spi2-core bridge-utils cpu-checker dconf-gsettings-backend dconf-service dns-root-data dnsmasq-base fontconfig gir1.2-atk-1.0 \
        gir1.2-ayatanaappindicator3-0.1 gir1.2-freedesktop gir1.2-gdkpixbuf-2.0 gir1.2-gstreamer-1.0 gir1.2-gtk-3.0 gir1.2-gtk-vnc-2.0 gir1.2-gtksource-4 gir1.2-harfbuzz-0.0 gir1.2-libosinfo-1.0 \
        gir1.2-libvirt-glib-1.0 gir1.2-pango-1.0 gir1.2-spiceclientglib-2.0 gir1.2-spiceclientgtk-3.0 gir1.2-vte-2.91 glib-networking glib-networking-common glib-networking-services gsettings-desktop-schemas \
        gstreamer1.0-plugins-base gstreamer1.0-plugins-good gstreamer1.0-x gtk-update-icon-cache hicolor-icon-theme humanity-icon-theme i965-va-driver intel-media-va-driver ipxe-qemu ipxe-qemu-256k-compat-efi-roms \
        libaa1 libasound2-data libasound2t64 libasyncns0 libatk-bridge2.0-0t64 libatk1.0-0t64 libatspi2.0-0t64 libavahi-client3 libavahi-common-data libavahi-common3 libavc1394-0 libayatana-appindicator3-1 \
        libayatana-ido3-0.4-0 libayatana-indicator3-7 libboost-iostreams1.83.0 libboost-thread1.83.0 libbrlapi0.8 libburn4t64 libcaca0 libcacard0 libcairo-gobject2 libcairo2 libcdparanoia0 libcolord2 libcups2t64 \
        libdatrie1 libdaxctl1 libdbusmenu-glib4 libdbusmenu-gtk3-4 libdconf1 libdecor-0-0 libdecor-0-plugin-1-gtk libdrm-amdgpu1 libdrm-intel1 libdrm-nouveau2 libdrm-radeon1 libdv4t64 libepoxy0 libfdt1 \
        libflac12t64 libgbm1 libgdk-pixbuf-2.0-0 libgdk-pixbuf2.0-bin libgdk-pixbuf2.0-common libgl1 libgl1-amber-dri libgl1-mesa-dri libglapi-mesa libglvnd0 libglx-mesa0 libglx0 libgraphite2-3 \
        libgstreamer-plugins-base1.0-0 libgstreamer-plugins-good1.0-0 libgtk-3-0t64 libgtk-3-bin libgtk-3-common libgtk-vnc-2.0-0 libgtksourceview-4-0 libgtksourceview-4-common libgvnc-1.0-0 libharfbuzz-gobject0 \
        libharfbuzz0b libiec61883-0 libigdgmm12 libiscsi7 libisoburn1t64 libisofs6t64 libjack-jackd2-0 liblcms2-2 libllvm19 libmp3lame0 libmpg123-0t64 libndctl6 libnfs14 libnss-mymachines libogg0 libopus0 \
        liborc-0.4-0t64 libosinfo-1.0-0 libosinfo-l10n libpango-1.0-0 libpangocairo-1.0-0 libpangoft2-1.0-0 libpangoxft-1.0-0 libpciaccess0 libpcsclite1 libphodav-3.0-0 libphodav-3.0-common libpipewire-0.3-0t64 \
        libpipewire-0.3-common libpixman-1-0 libpmem1 libpmemobj1 libproxy1v5 libpulse0 librados2 libraw1394-11 librbd1 librdmacm1t64 librsvg2-2 librsvg2-common libsamplerate0 libsdl2-2.0-0 libshout3 libslirp0 \
        libsndfile1 libsoup-3.0-0 libsoup-3.0-common libspa-0.2-modules libspeex1 libspice-client-glib-2.0-8 libspice-client-gtk-3.0-5 libspice-server1 libtag1v5 libtag1v5-vanilla libthai-data libthai0 libtheora0 \
        libtpms0 libtwolame0 liburing2 libusbredirhost1t64 libusbredirparser1t64 libv4l-0t64 libv4lconvert0t64 libva-x11-2 libva2 libvirglrenderer1 libvirt-clients libvirt-daemon libvirt-daemon-config-network \
        libvirt-daemon-config-nwfilter libvirt-daemon-driver-qemu libvirt-daemon-system libvirt-daemon-system-systemd libvirt-glib-1.0-0 libvirt-glib-1.0-data libvirt-l10n libvirt0 libvisual-0.4-0 libvorbis0a \
        libvorbisenc2 libvpx9 libvte-2.91-0 libvte-2.91-common libvulkan1 libwavpack1 libwayland-client0 libwayland-cursor0 libwayland-egl1 libwayland-server0 libwebrtc-audio-processing1 libx11-xcb1 libxcb-dri2-0 \
        libxcb-dri3-0 libxcb-glx0 libxcb-present0 libxcb-randr0 libxcb-render0 libxcb-shm0 libxcb-sync1 libxcb-xfixes0 libxcomposite1 libxcursor1 libxdamage1 libxfixes3 libxft2 libxi6 libxinerama1 libxml2-utils \
        libxrandr2 libxrender1 libxshmfence1 libxss1 libxtst6 libxv1 libxxf86vm1 libyajl2 mdevctl mesa-libgallium mesa-va-drivers mesa-vulkan-drivers msr-tools osinfo-db ovmf python3-cairo python3-gi-cairo \
        python3-libvirt python3-libxml2 qemu-block-extra qemu-system-common qemu-system-data qemu-system-gui qemu-system-modules-opengl qemu-system-modules-spice qemu-system-x86 qemu-utils seabios \
        session-migration spice-client-glib-usb-acl-helper swtpm swtpm-tools systemd-container ubuntu-mono va-driver-all virt-manager virt-viewer virtinst x11-common xorriso
    export RELEASE=v1.3.1
    wget https://gh-proxy.com/github.com/kubevirt/kubevirt/releases/download/${RELEASE}/kubevirt-operator.yaml
    wget https://gh-proxy.com/github.com/kubevirt/kubevirt/releases/download/${RELEASE}/kubevirt-cr.yaml
    wget https://gh-proxy.com/github.com/kubevirt/kubevirt/releases/download/${RELEASE}/virtctl-${RELEASE}-linux-amd64
    export RELEASE=v1.60.3
    wget https://gh-proxy.com/github.com/kubevirt/containerized-data-importer/releases/download/$RELEASE/cdi-operator.yaml
    wget https://gh-proxy.com/github.com/kubevirt/containerized-data-importer/releases/download/$RELEASE/cdi-cr.yaml

    # download helm
    echo -e "\e[94mPreparing helm... \e[39m"
    cd $script_dir
    rm -rf helm && mkdir helm && cd helm
    wget https://get.helm.sh/helm-v3.17.1-linux-amd64.tar.gz

    echo -e "\e[92mAll dependencies have been downloaded successfully! \e[39m"

11. Certificates

11.1 Check whether the certificates have expired

    kubeadm certs check-expiration

11.2 Back up the original files

    # back up the original cluster files
    mkdir ~/confirm
    cp -rf /etc/kubernetes/ ~/confirm/
    ll ~/confirm/kubernetes/
    # back up etcd
    mkdir ~/confirm/data_etcd
    cp -rf /var/lib/etcd/* ~/confirm/data_etcd
    cp /usr/bin/kubeadm /usr/bin/kubeadm.bak

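11.3 Renew the certificates

    # renews every certificate except the one in kubelet.conf
    kubeadm certs renew all

    vim /etc/kubernetes/admin.conf
    # copy the user entry and the base64-encoded values below it (from line 17 on) into kubelet.conf
    # replace /etc/kubernetes/kubelet.conf on the worker nodes with the kubelet.conf from the master node
    # then restart the kubelet service
    # (on the containerd-based setup in this guide, restart containerd.service instead of docker.service)
    systemctl restart docker.service && systemctl restart kubelet.service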

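11.4 Automatic kubelet certificate renewal

11.4.1 Configure the kube-controller-manager component (master node)

    vim /etc/kubernetes/manifests/kube-controller-manager.yaml
    # add the following arguments to the container's command
    # (recent Kubernetes releases name the first flag --cluster-signing-duration)
    - --experimental-cluster-signing-duration=87600h0m0s     # issue kubelet client certificates valid for 10 years
    - --feature-gates=RotateKubeletServerCertificate=true   # enable issuing of server certificates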

11.4.2 Apply the configuration on every control-plane node

11.4.3 Update the worker nodes

    vim /var/lib/kubelet/config.yaml
    # make sure rotateCertificates: true is set

11.4.4 Restart

    kubectl delete pod kube-controller-manager-k8s-master1 -n kube-system

11.5 Renew with an open-source component (requires recompiling Kubernetes)

https://studygolang.com/dl/golang/go1.16.5.linux-amd64.tar.gz

https://codeload.github.com/kubernetes/kubernetes/zip/refs/tags/v1.22.0

12. dashboard

12.1 Prepare the offline Helm deployment files

    wget https://github.com/kubernetes/dashboard/releases/download/kubernetes-dashboard-7.10.5/kubernetes-dashboard-7.10.5.tgz
    tar xvf kubernetes-dashboard-7.10.5.tgz
    vim values.yaml

    # replace the kong section with the following (this changes proxy.type and proxy.http.enabled)
    kong:
      enabled: true
      env:
        dns_order: LAST,A,CNAME,AAAA,SRV
        plugins: 'off'
        nginx_worker_processes: 1
      ingressController:
        enabled: false
      manager:
        enabled: false
      dblessConfig:
        configMap: kong-dbless-config
      proxy:
        type: NodePort
        http:
          enabled: true

Image list:

    docker.io/kubernetesui/dashboard-api:1.10.3
    docker.io/kubernetesui/dashboard-auth:1.2.3
    docker.io/kubernetesui/dashboard-metrics-scraper:1.2.2
    docker.io/kubernetesui/dashboard-web:1.6.2

Use the following script to package the offline image archive:

    #!/bin/bash
    set -e

    # resolve the script directory first
    script_dir=$(cd "$(dirname "$0")" && pwd)

    cd $script_dir
    rm -rf images && mkdir images && cd images
    images_list=(
        # dashboard
        "docker.io/kubernetesui/dashboard-api:1.10.3"
        "docker.io/kubernetesui/dashboard-auth:1.2.3"
        "docker.io/kubernetesui/dashboard-metrics-scraper:1.2.2"
        "docker.io/kubernetesui/dashboard-web:1.6.2"
        "docker.io/library/kong:3.8"
    )
    # flatten the array into one space-separated string
    images_list="${images_list[*]}"
    for img in $images_list; do
        echo -e "\e[94m -> Preparing $img... \e[39m"
        # uses the ctr found on PATH, matching the export command below
        ctr -n k8s.io images pull --platform linux/amd64 $img --hosts-dir $script_dir
    done
    eval "ctr -n k8s.io images export --platform linux/amd64 ../containerd_images.tar ${images_list}"

12.2 Deploy

    helm install k8s-dashboard /disk2/shared/build_offline_origin/dashboard/kubernetes-dashboard -n kubernetes-dashboard

12.3 Create an account

    kubectl create serviceaccount dashboard -n kubernetes-dashboard
    kubectl create rolebinding def-ns-admin --clusterrole=admin --serviceaccount=default:def-ns-admin
    kubectl create clusterrolebinding dashboard-cluster-admin --clusterrole=cluster-admin --serviceaccount=kubernetes-dashboard:dashboard
    # create a temporary token
    kubectl -n kubernetes-dashboard create token dashboard
    # prints a short-lived JWT beginning with eyJhbGciOiJSUzI1NiIs... (full sample output omitted)

Create a long-lived token (save the following as token.yaml):

    apiVersion: v1
    kind: Secret
    metadata:
      name: admin-user
      namespace: kubernetes-dashboard
      annotations:
        kubernetes.io/service-account.name: "dashboard"
    type: kubernetes.io/service-account-token

    k apply -f token.yaml
    kubectl get secret admin-user -n kubernetes-dashboard -o jsonpath={".data.token"} | base64 -d
    # prints the long-lived JWT beginning with eyJhbGciOiJSUzI1NiIs... (full sample output omitted)

12.4 Access

    k get svc -A
    # kubernetes-dashboard   k8s-dashboard-kong-proxy   NodePort   10.96.244.145   <none>   80:32705/TCP,443:30585/TCP   8m49s

Open any node's IP on port 30585 (the NodePort mapped to 443 above) and log in with the token you just obtained.

13. prometheus + grafana

13.1 Prepare the offline Helm deployment files

    wget https://github.com/prometheus-community/helm-charts/releases/download/prometheus-27.5.0/prometheus-27.5.0.tgz
    wget https://github.com/grafana/helm-charts/releases/download/grafana-8.10.1/grafana-8.10.1.tgz
    # wget https://github.com/prometheus-community/helm-charts/releases/download/alertmanager-1.26.0/alertmanager-1.26.0.tgz
    tar xvf prometheus-27.5.0.tgz
    tar xvf grafana-8.10.1.tgz
    # tar xvf alertmanager-1.26.0.tgz

    vim prometheus/values.yaml
    # change the server service type from ClusterIP to NodePort, enable statefulSet,
    # enable persistence with storage class local-path, and set the node selector
    server:
      service:
        loadBalancerIP: ""
        loadBalancerSourceRanges: []
        servicePort: 80
        sessionAffinity: None
        type: NodePort
      statefulSet:
        ## If true, use a statefulset instead of a deployment for pod management.
        ## This allows to scale replicas to more than 1 pod
        ##
        enabled: true
      persistence:
        storageClass: "local-path"
      nodeSelector:
        node-role.kubernetes.io/control-plane: "" # runs on the control plane; remove the control-plane taint beforehand

    vim prometheus/charts/alertmanager/values.yaml
    # change the persistence storage class to local-path
    persistence:
      storageClass: "local-path"
    service:
      annotations: {}
      labels: {}
      type: NodePort

    vim grafana/values.yaml
    # enable NodePort
    service:
      enabled: true
      type: NodePort

13.2 Deploy

    helm install prometheus /disk2/shared/build_offline_origin/prometheus/prometheus -n prometheus
    helm install grafana /disk2/shared/build_offline_origin/grafana/grafana -n grafana
    # get the Grafana login password; the user name is admin
    kubectl get secret --namespace grafana grafana -o jsonpath="{.data.admin-password}" | base64 --decode ; echo

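13.3 Access from Python

Reference: 《python抓取Prometheus的数据(使用prometheus-api-client库)》 (Fetching Prometheus data with Python using the prometheus-api-client library), 南风丶轻语, 博客园 (cnblogs).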

    import json
    from datetime import timedelta

    from prometheus_api_client import PrometheusConnect, MetricSnapshotDataFrame, MetricRangeDataFrame, Metric, MetricsList
    from prometheus_api_client.utils import parse_datetime

    # url: URL of the Prometheus host
    # headers: dict of HTTP headers used to talk to the host; if Prometheus requires
    #          authentication, add it here, e.g. {"Authorization": "bearer my_oauth_token_to_the_host"}
    # disable_ssl: if True, SSL certificate verification is disabled for requests to the host
    #              (disable it for http; https requires verification)
    prom = PrometheusConnect(url="http://172.17.140.17:9090", headers=None, disable_ssl=True)
    ok = prom.check_prometheus_connection()
    print(f"Connecting to Prometheus: {prom.url}, status: {'connected' if ok else 'connection failed'}")

    # list all metric names
    all_metrics = prom.all_metrics()
    print("------ all metrics ------")
    for metrics in all_metrics:
        print(metrics)
    print('------------------')

    # values of one label; the endpoint called is /api/v1/label/end_time/values
    label_name = "end_time"
    params = None
    result = prom.get_label_values(label_name, params)
    print(f"-------------------- label fetched: {label_name}  params: {params} --------------------")
    print(f"count: {len(result)}")
    for r in result:
        print(r)

    # current value of a metric filtered by labels; the endpoint called is /api/v1/query
    query = "up"
    label_config = {"user": "dev"}
    values = prom.get_current_metric_value(query, label_config=label_config)
    for v in values:
        print(v)
    print('-' * 20)

    # the same query written as raw PromQL; the endpoint called is /api/v1/query
    query = "up{user='dev'}"
    values = prom.custom_query(query)
    for v in values:
        print(v)

    # range query over the last two days; the endpoint called is /api/v1/query_range
    query = 'up{instance=~"172.16.90.22:9100"}'
    start_time = parse_datetime("2d")
    end_time = parse_datetime("now")
    chunk_size = timedelta(days=2)
    result = prom.get_metric_range_data(query, None, start_time, end_time, chunk_size)
    print(f'result:{result}')
    with open('a.json', 'w') as f:
        json.dump(result, f)

    # wrap the raw result in Metric objects
    query = "up"
    label_config = {"user": "dev"}
    metric_data = prom.get_current_metric_value(query, label_config=label_config)
    metric_object_list: list[Metric] = MetricsList(metric_data)
    for item in metric_object_list:
        print(f"metric name: {item.metric_name}  labels: {item.label_config}")

    # convert a snapshot result to a pandas DataFrame
    query = "up"
    label_config = {"user": "dev"}
    metric_data = prom.get_current_metric_value(query, label_config=label_config)
    frame = MetricSnapshotDataFrame(metric_data)
    head = frame.head()
    print(head)
    print("-" * 20)

    # convert a range result to a pandas DataFrame
    query = 'up{instance=~"172.16.90.22:9100"}'
    start_time = parse_datetime("2d")
    end_time = parse_datetime("now")
    chunk_size = timedelta(days=2)
    result = prom.get_metric_range_data(query, None, start_time, end_time, chunk_size)
    frame2 = MetricRangeDataFrame(result)
    print(frame2.head())

13.4 Exposing metrics

[1228]Python prometheus-client使用方式-腾讯云开发者社区-腾讯云

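You need to write your own endpoint to expose the metrics. The response follows the Prometheus gauge format and must be returned as plain text, not JSON.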

```text
# Served on your application's /metrics path
# 1 means healthy, 0 means unhealthy
app_liveness_status 1
app_readiness_status 1
```
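A minimal sketch of such an endpoint, using only the Python standard library. The helper names `render_metrics`, `MetricsHandler`, and `serve`, as well as port 8000, are assumptions made for illustration; a real application would report its actual health state instead of the hard-coded values.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


def render_metrics(alive: bool, ready: bool) -> str:
    """Render the two gauges as plain text in the Prometheus exposition format."""
    lines = [
        "# HELP app_liveness_status 1 if the app is alive, 0 otherwise",
        "# TYPE app_liveness_status gauge",
        f"app_liveness_status {int(alive)}",
        "# HELP app_readiness_status 1 if the app is ready, 0 otherwise",
        "# TYPE app_readiness_status gauge",
        f"app_readiness_status {int(ready)}",
    ]
    return "\n".join(lines) + "\n"


class MetricsHandler(BaseHTTPRequestHandler):
    """Hypothetical handler: serves /metrics and returns 404 for anything else."""

    def do_GET(self):
        if self.path != "/metrics":
            self.send_error(404)
            return
        # Hard-coded health state; replace with real checks.
        body = render_metrics(alive=True, ready=True).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8000) -> None:
    """Block forever, serving metrics on the given (assumed) port."""
    HTTPServer(("0.0.0.0", port), MetricsHandler).serve_forever()
```

Calling `serve()` starts the server; `curl http://localhost:8000/metrics` should then return the plain-text gauges that Prometheus scrapes.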

13.5 Alertmanager configuration

First edit prometheus/charts/alertmanager/values.yaml and add the following configuration:

```yaml
config:
  global:
    resolve_timeout: 5m
    smtp_hello: 'prometheus'
    smtp_from: 'xxx@qq.com'
    smtp_smarthost: 'smtp.qq.com:465'
    smtp_auth_username: 'xxx@qq.com'
    smtp_auth_password: 'xxxxxxxxxxxxxxxx'
    smtp_require_tls: false
  templates:
    - '/etc/config/*.tmpl'
  receivers:
    - name: email
      email_configs:
        - to: 'x@qq.com'
          headers: {"subject": '{{ template "email.header" . }}'}
          html: '{{ template "email.html" . }}'
          send_resolved: true # also send an email when the alert is resolved
    - name: 'sms-receiver'
      webhook_configs:
        - url: 'http://your-custom-webhook-service:8080/sms-api'
          send_resolved: true
  route:
    group_wait: 5s
    group_interval: 5s
    receiver: email
    repeat_interval: 5m
  inhibit_rules:
    - source_match:
        severity: 'critical'
      target_match:
        severity: 'warning'
      equal: ['alertname']

templates:
  # 28800e9 ns = 8 h: shifts the UTC timestamps to UTC+8.
  # The ranges use .Alerts.Firing / .Alerts.Resolved so each section lists only its own alerts.
  template_email.tmpl: |-
    {{ define "email.header" }}
    {{ if eq .Status "firing" }}[Warning]: {{ range .Alerts }}{{ .Annotations.summary }} {{ end }}{{ end }}
    {{ if eq .Status "resolved" }}[Resolved]: {{ range .Alerts }}{{ .Annotations.resolve_summary }} {{ end }}{{ end }}
    {{ end }}
    {{ define "email.html" }}
    {{ if gt (len .Alerts.Firing) 0 -}}
    <font color="#FF0000"><h3>[Warning]:</h3></font>
    {{ range .Alerts.Firing }}
    Severity: {{ .Labels.severity }} <br>
    Alert name: {{ .Labels.alertname }} <br>
    Affected host: {{ .Labels.instance }} <br>
    Summary: {{ .Annotations.summary }} <br>
    Details: {{ .Annotations.description }} <br>
    Fired at: {{ (.StartsAt.Add 28800e9).Format "2006-01-02 15:04:05" }} <br>
    {{- end }}
    {{- end }}
    {{ if gt (len .Alerts.Resolved) 0 -}}
    <font color="#66CDAA"><h3>[Resolved]:</h3></font>
    {{ range .Alerts.Resolved }}
    Severity: {{ .Labels.severity }} <br>
    Alert name: {{ .Labels.alertname }} <br>
    Affected host: {{ .Labels.instance }} <br>
    Summary: {{ .Annotations.resolve_summary }} <br>
    Details: {{ .Annotations.resolve_description }} <br>
    Fired at: {{ (.StartsAt.Add 28800e9).Format "2006-01-02 15:04:05" }} <br>
    Resolved at: {{ (.EndsAt.Add 28800e9).Format "2006-01-02 15:04:05" }} <br>
    {{- end }}
    {{- end }}
    {{- end }}
```

Then edit prometheus/values.yaml and add the following configuration:

```yaml
serverFiles:
  prometheus.yml:
    rule_files:
      - /etc/config/recording_rules.yml
      - /etc/config/alerting_rules.yml
      - /etc/config/rules
      - /etc/config/alerts
    scrape_configs:
      - job_name: prometheus
        static_configs:
          - targets:
              - localhost:9090
      - job_name: 'my-app'
        kubernetes_sd_configs:
          - role: pod
        relabel_configs:
          - source_labels: [__meta_kubernetes_pod_label_app]
            regex: my-app-label
            action: keep
  alerting_rules.yml:
    groups:
      - name: physical-node-status-alerts
        rules:
          - alert: Node-up
            expr: up{component="node-exporter"} == 0
            for: 2s
            labels:
              severity: critical
            annotations:
              summary: "Server {{ $labels.kubernetes_node }} has stopped running!"
              description: "Server {{ $labels.kubernetes_node }} has stopped unexpectedly, IP: {{ $labels.instance }}. Please investigate!"
              resolve_summary: "Server {{ $labels.kubernetes_node }} is running again!"
              resolve_description: "Server {{ $labels.kubernetes_node }} is running again, IP: {{ $labels.instance }}."
      - name: my-app-health-alerts
        rules:
          - alert: MyappLivenessDown
            expr: app_liveness_status == 0
            for: 1m
            labels:
              severity: critical
            annotations:
              summary: "My app liveness check failed"
              description: "My app's liveness probe has failed for over 1 minute."
```

Upgrade the release and restart:

```shell
helm upgrade prometheus -n prometheus .
# Check whether the Alertmanager configuration was reloaded (same for the server):
kubectl logs -f -n prometheus prometheus-alertmanager-7757db759b-9nq9c prometheus-alertmanager
# Inspect the server's ConfigMap (same for Alertmanager):
kubectl get configmap -n prometheus
kubectl describe configmaps -n prometheus prometheus-server
# Check in the Prometheus and Alertmanager consoles that the alert rules took effect
```
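Besides the consoles, the loaded rules can also be read from the Prometheus HTTP API at `/api/v1/rules`. The sketch below assumes that endpoint is reachable from where the script runs; the function names and the `base_url` argument are illustrative choices, not part of the chart.

```python
import json
from urllib.request import urlopen


def alert_rule_states(payload: dict) -> dict[str, str]:
    """Map each alerting rule name to its state from a /api/v1/rules response."""
    states = {}
    for group in payload.get("data", {}).get("groups", []):
        for rule in group.get("rules", []):
            # Recording rules are skipped; only alerting rules carry a state.
            if rule.get("type") == "alerting":
                states[rule["name"]] = rule.get("state", "unknown")
    return states


def fetch_alert_rule_states(base_url: str) -> dict[str, str]:
    """Fetch the rules from a Prometheus server (base_url is an assumption)."""
    with urlopen(f"{base_url}/api/v1/rules", timeout=10) as resp:
        return alert_rule_states(json.load(resp))
```

For example, `fetch_alert_rule_states("http://172.17.140.17:9090")` should list `Node-up` and `MyappLivenessDown` once the new ConfigMap has been loaded.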

14. Updating configuration files

```shell
# Path of the YAML manifest to edit: /etc/kubernetes/manifests/kube-apiserver.yaml
vim /etc/kubernetes/manifests/kube-apiserver.yaml
# The pod restarts automatically once the change is saved
# Path of the other configuration: /etc/kubernetes/controller-manager.conf
vim /etc/kubernetes/controller-manager.conf
```

15. Architecture

15.1 End-to-end flow

15.1.1 deployment

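The user writes a YAML file for an object, for example a deployment, and submits it with kubectl.

kubectl calls the api-server, and the api-server writes the data to etcd.

Optional: kube-controller-manager creates new resources depending on the type the user submitted:

For a deployment, a replicaset has to be created; the new replicaset is written through the api-server.

For the new replicaset, pods have to be created; the new pods are written through the api-server.

kube-scheduler sees that a pod has been created and enters the scheduling phase: it assigns the pod to a node and writes the result to the api-server, updating the pod's placement.

kubelet creates the pod:

It sees that a pod needs to be created on its own node.

kubelet calls the container runtime (such as containerd) to create the pod's sandbox container (the pause container).

It requests an IP from the CNI (at this point the CNI sets up the network).

It reports the IP to the api-server.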
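The chain above can be pictured with a toy in-memory simulation. This is only a sketch under heavy assumptions: `Store` stands in for the api-server plus etcd, the scheduler is a plain round-robin, and the IP pool replaces sandbox creation and the CNI call. All names here are hypothetical, and real components react to watch events instead of being called in sequence.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Store:
    """Stand-in for api-server + etcd: objects keyed by (kind, name)."""
    objects: dict = field(default_factory=dict)

    def put(self, kind: str, name: str, **spec) -> None:
        self.objects[(kind, name)] = spec

    def of_kind(self, kind: str) -> dict:
        return {n: o for (k, n), o in self.objects.items() if k == kind}


def deployment_controller(store: Store) -> None:
    # A deployment needs a replicaset.
    for name, dep in store.of_kind("Deployment").items():
        rs_name = f"{name}-rs"
        if rs_name not in store.of_kind("ReplicaSet"):
            store.put("ReplicaSet", rs_name, replicas=dep["replicas"])


def replicaset_controller(store: Store) -> None:
    # A replicaset needs pods; create the missing ones, still unscheduled.
    for name, rs in store.of_kind("ReplicaSet").items():
        owned = [p for p in store.of_kind("Pod") if p.startswith(name + "-")]
        for i in range(len(owned), rs["replicas"]):
            store.put("Pod", f"{name}-{i}", node=None, ip=None)


def scheduler(store: Store, nodes: list) -> None:
    # Assign every unscheduled pod to a node (round-robin for simplicity).
    for i, (_, pod) in enumerate(sorted(store.of_kind("Pod").items())):
        if pod["node"] is None:
            pod["node"] = nodes[i % len(nodes)]


def kubelet(store: Store, node: str, ip_pool) -> None:
    # Start pods bound to this node and report their IPs back.
    for _, pod in sorted(store.of_kind("Pod").items()):
        if pod["node"] == node and pod["ip"] is None:
            pod["ip"] = next(ip_pool)  # stands in for sandbox creation + CNI


def simulate(replicas: int = 3) -> dict:
    store = Store()
    nodes = ["node-a", "node-b"]
    ip_pool = (f"10.0.0.{i}" for i in count(2))
    store.put("Deployment", "web", replicas=replicas)  # "kubectl apply"
    deployment_controller(store)
    replicaset_controller(store)
    scheduler(store, nodes)
    for node in nodes:
        kubelet(store, node, ip_pool)
    return store.of_kind("Pod")
```

Calling `simulate(3)` returns three pods, each with a node and an IP filled in, mirroring the order in which the real objects get their fields populated.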

15.1.2 service

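The user writes a YAML file for an object and submits it with kubectl.

kubectl calls the api-server, and the api-server writes the data to etcd.

controller-manager sees that a new service has been created; its Endpoints Controller selects the matching pods and writes them to the api-server.

kube-proxy or cilium watches for endpoints updates and updates the forwarding rules on every node:

This is done with iptables, ipvs, or eBPF (cilium).

The DNS service watches for service updates and writes the corresponding DNS records.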
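The selection and fan-out steps above can be sketched as plain functions. The field names (`selector`, `cluster_ip`, `target_port`, `ready`) and the `cluster.local` domain are assumptions chosen for the example; the real components work on Kubernetes API objects and program iptables, ipvs, or eBPF maps, not Python dictionaries.

```python
def select_endpoints(selector: dict, pods: list) -> list:
    """Return the IPs of ready pods whose labels contain every selector pair."""
    return [
        p["ip"]
        for p in pods
        if p.get("ready") and p.get("ip")
        and all(p["labels"].get(k) == v for k, v in selector.items())
    ]


def build_forwarding_table(services: list, pods: list) -> dict:
    """Per-node view: (cluster IP, port) -> list of backend pod addresses."""
    table = {}
    for svc in services:
        backends = select_endpoints(svc["selector"], pods)
        table[(svc["cluster_ip"], svc["port"])] = [
            f"{ip}:{svc['target_port']}" for ip in backends
        ]
    return table


def dns_records(services: list, domain: str = "cluster.local") -> dict:
    """Service DNS name -> cluster IP (the domain is an assumed default)."""
    return {
        f"{svc['name']}.{svc['namespace']}.svc.{domain}": svc["cluster_ip"]
        for svc in services
    }
```

Pods that are not ready or do not match the selector simply drop out of the backend list, which is why traffic stops reaching them without any change to the service itself.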

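15.2 cilium architecture

15.2.1 cilium-operator

Creates cilium's CRD resources, such as IP pools.

Includes IPAM, which handles IP allocation.

Synchronizes data with an external database.

15.2.2 cilium-envoy

Handles L7 features, such as the load-balancing policy of a service.

15.2.3 cilium

Handles low-level forwarding, using eBPF to build the path from a clusterIP to the pods.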

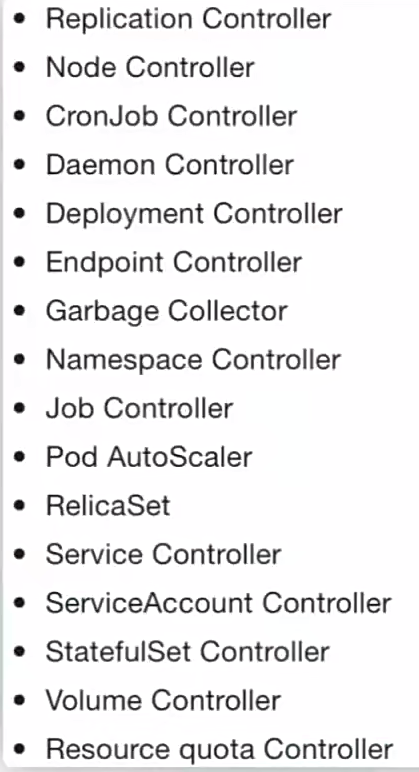

15.3 Components of kube-controller-manager

The controller names are defined in kubernetes/cmd/kube-controller-manager/names/controller_names.go at master · kubernetes/kubernetes

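replication controller: (legacy; superseded by ReplicaSet)

node controller: watches nodes and updates node-related information

cronjob controller: manages CronJobs

daemonset controller: watches DaemonSets and creates or updates their pods

deployment controller: watches Deployments and creates or updates their ReplicaSets

endpoint controller: keeps the list of pods behind each Service up to date

garbage collector controller: watches resource object events, maintains the dependency relationships between objects, and decides whether to delete dependent objects according to the owner's deletion policy.

namespace controller: manages namespace information and configuration

job controller: manages Jobs, similar to the cronjob controller

pod autoscaler: manages automatic scaling; based on resource usage it adjusts the replica count of objects such as Deployments or StatefulSets, and thereby the number of pods they run

replicaset: keeps the number of pods in a ReplicaSet at the desired count

service controller: reconciles Services with external infrastructure; for example, a Service of type LoadBalancer has to communicate with the external cloud load balancer service

serviceaccounttoken controller: watches ServiceAccount and Namespace events; if a namespace has no default ServiceAccount, the ServiceAccount controller creates one for it. It also manages user tokens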

statefulset controller: watches StatefulSets and creates or updates their pods directly (StatefulSets do not use ReplicaSets)

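volume controller: (deprecated)

PV controller: watches PVC creation and binds a suitable PV, or triggers creation of a new PV (in-tree, or out-of-tree through CSI); attaching and detaching volumes for the corresponding pods is handled by the related attach/detach controller

resource quota controller: keeps quota usage up to date so that over-limit creation is blocked; for example, when the memory requested by a new pod plus the memory already requested exceeds the maximum, the creation is rejected (the rejection itself happens at api-server admission)

disruption controller: guarantees a minimum number of running pods during updates and similar voluntary disruptions

certificate… controller: manages certificates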

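ttl controller: the in-tree controller sets a TTL annotation on nodes, scaled to cluster size; deleting arbitrary resources when a TTL expires is what third-party projects such as TwiN/k8s-ttl-controller provide

ttl after finished controller: deletes finished Jobs once their ttlSecondsAfterFinished has elapsed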

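root ca certificate publisher controller: manages the kube-root-ca.crt ConfigMap

KubeAPIServerClusterTrustBundlePublisherController: also certificate management (ClusterTrustBundle)

SELinuxWarningController: detects pods with different SELinux contexts using the same resource (volume) and emits a warning

bootstrapsigner controller: signs the cluster-info ConfigMap with bootstrap tokens, used when bootstrapping a cluster or joining one

taint eviction controller: evicts pods from nodes whose NoExecute taints the pods do not tolerate

clusterRole aggregation controller: combines ClusterRoles into aggregated ClusterRoles according to their aggregation rules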
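To illustrate the resource quota example from the list above (requested memory plus already-requested memory must stay within the limit), here is a minimal sketch. The `MemoryQuota` class and its MiB-based fields are hypothetical; real quotas are ResourceQuota objects that cover many resource types.

```python
from dataclasses import dataclass


@dataclass
class MemoryQuota:
    """Toy quota: a hard memory limit and the amount already requested."""
    hard_mib: int
    used_mib: int = 0

    def try_admit(self, request_mib: int) -> bool:
        """Admit the request only if it fits under the hard limit."""
        if self.used_mib + request_mib > self.hard_mib:
            return False  # over the limit: creation is rejected
        self.used_mib += request_mib
        return True

    def release(self, request_mib: int) -> None:
        """Give memory back when a pod is deleted."""
        self.used_mib = max(0, self.used_mib - request_mib)
```

With a 1024 MiB limit, two 512 MiB pods are admitted and a third is rejected until one of them is released.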

15.4 References

apiserver、kube-scheduler、kube-controller-manager原理分析_kube-api kube-controller kube-scheduler-CSDN博客

Controller-Manager 和 Scheduler | 深入架构原理与实践

k8s中Controller-Manager和Scheduler的选主逻辑 - JL_Zhou - 博客园

kube-controller-manager | Kubernetes

Cilium完全替换kube-proxy - 知乎

程序员 - Cilium CNI深度指南 - 个人文章 - SegmentFault 思否

Cilium Operator — Cilium 1.17.2 documentation

Kubernetes 中 Cilium 网络架构详解与流量处理流程1. Cilium 和 Cilium-Operator - 掘金

Cilium 系列-3-Cilium 的基本组件和重要概念 - 知乎

Cilium 如何处理 L7 流量 - 知乎

Go Extension - Cilium学习笔记

使用 Envoy 作为 Cilium 的 L7 代理 - Jimmy Song

ReplicationController | Kubernetes

深入分析Kubernetes DaemonSet Controller-腾讯云开发者社区-腾讯云

k8s之ServiceAccount - 上古伪神 - 博客园

4.3 使用ReplicaSet而不是ReplicationController - 知乎

Pod 水平自动扩缩 | Kubernetes

Kubernetes Job Controller 原理和源码分析(一) - 胡说云原生 - 博客园

Garbage Controller - 简书

kubernetes垃圾回收器GarbageCollector Controller源码分析(一)-腾讯云开发者社区-腾讯云

CronJob | Kubernetes

k8s 组件介绍-kube-controller-manager - 疯狂的米粒儿 - 博客园

Kubernetes Controller 实现机制深度解析-腾讯云开发者社区-腾讯云

Cilium系列-6-从地址伪装从IPtables切换为eBPF_cliium ipv4nativeroutingcidr-CSDN博客

Iptables Usage — Cilium 1.17.2 documentation

k8s endpoints controller源码分析 - 良凯尔 - 博客园

Code optimize on kubernetes/cmd/kube-controller-manager/app/controllermanager.go · Issue #115995 · kubernetes/kubernetes

kubernetes/cmd/kube-controller-manager/names/controller_names.go at master · kubernetes/kubernetes

kube-controller-manager源码分析-PV controller分析 - 良凯尔 - 博客园

【玩转腾讯云】K8s存储 —— Attach/Detach Controller与TKE现网案例分析-腾讯云开发者社区-腾讯云

K8S Pod 保护之 PodDisruptionBudget - 简书

ClusterRole | Kubernetes

配置 - 配置控制器 - 《Karmada v1.12 中文文档》 - 书栈网 · BookStack

使用启动引导令牌(Bootstrap Tokens)认证 | Kubernetes

为 Pod 或容器配置安全上下文 | Kubernetes

ClusterTrustBundle v1alpha1 | Kubernetes (K8s) 中文

kube controller manager之root-ca-cert-publisher 简单记录 - 简书

TwiN/k8s-ttl-controller: Kubernetes controller that enables timed resource deletion using TTL annotation

Kubernetes Pod 之 PodDisruptionBudget 简单理解 - 知乎

k8s学习笔记(8)— kubernetes核心组件之scheduler详解_kubernetes scheduler-CSDN博客

16. References

k8s集群部署时etcd容器不停重启问题及处理_6443: connect: connection refused-CSDN博客

nv-k8s - Ethereal’s Blog (ethereal-o.github.io)

k8s集群搭建 - Ethereal’s Blog (ethereal-o.github.io)

cby-chen/Kubernetes: kubernetes (k8s) 二进制高可用安装,Binary installation of kubernetes (k8s) — 开源不易,帮忙点个star,谢谢了🌹 (github.com)

Kubernetes/doc/kubeadm-install.md at main · cby-chen/Kubernetes (github.com)

DaoCloud/public-image-mirror: 很多镜像都在国外。比如 gcr 。国内下载很慢,需要加速。致力于提供连接全世界的稳定可靠安全的容器镜像服务。 (github.com)

GitHub 文件加速

nmtui修改静态IP地址,巨好用!_openeneuler系统中nmtui怎么设置ip地址-CSDN博客

Kubernetes上安装Metrics-Server - YOYOFx - 博客园

Private Registry auth config when using hosts.toml · containerd/containerd · Discussion #6468

Containerd配置拉取镜像加速 - OrcHome

GitHub 文件加速代理 - 快速访问 GitHub 文件

kubernetes(k8s) v1.30.1 helm 集群安装 Dashboard v7.4.0 可视化管理工具 图形化管理工具_helm安装指定版本kubernetes dashboard-CSDN博客

Unknown error (200): Http failure during parsing for https:///api/v1/csrftoken/login · Issue #8829 · kubernetes/dashboard

K8S 创建和查看secret(九) - 简书

Kubernetes —- Dashboard安装、访问(Token、Kubeconfig)-CSDN博客

kubeadm join-集群中加入新的master与worker节点_failed to create api client configuration from kub-CSDN博客

Kubernetes监控实战:Grafana默认用户名密码配置详解 - 云原生实践

使用 Helm Charts 部署 Grafana | Grafana 文档 - Grafana 可观测平台

更改 PersistentVolume 的回收策略 | Kubernetes

Kubernetes K8S之固定节点nodeName和nodeSelector调度详解 - 踏歌行666 - 博客园

WaitForFirstConsumer PersistentVolumeClaim等待在绑定之前创建第一个使用者-腾讯云开发者社区-腾讯云

kubeadm reset 时卡住_已解决_博问_博客园

5 kubelet证书自动续签 - 云起时。 - 博客园

Kubernetes集群更换证书(正常更新方法、和更新证书为99年)_kubectl 证书过期时间设置-CSDN博客

python抓取Prometheus的数据(使用prometheus-api-client库) - 南风丶轻语 - 博客园

[1228]Python prometheus-client使用方式-腾讯云开发者社区-腾讯云

kubernetes kubeadm方式安装配置文件目录和镜像目录-CSDN博客

修改 Kubernetes apiserver 启动参数 | Kuboard

kubesre/docker-registry-mirrors: 多平台容器镜像代理服务,支持 Docker Hub, GitHub, Google, k8s, Quay, Microsoft 等镜像仓库.

gcr.io,ghcr.io,k8s.gcr.io,quay.io 国内镜像加速服务整理分享 | Hyper Tech

Release alertmanager-1.26.0 · prometheus-community/helm-charts

K8S之Helm部署Prometheus | 左老师的课堂笔记

Prometheus+Grafana+Alertmanager实现告警推送教程 —– 图文详解 - 虚无境 - 博客园

Prometheus+SpringBoot应用监控全过程详解 - 知乎

Prometheus监控组件在SpringBoot项目中使用实践_prometheus监控springboot项目-CSDN博客

使用 Prometheus 监控 Spring Boot 应用 - spring 中文网